Overview

This guide walks through seven connected moves: tightening technical SLA definitions, introducing agentic AI for Tier 1 and Tier 2 tickets, turning incident response into reusable “Support as Code,” automating FinOps, embracing self-healing AIOps, measuring success with hard numbers, and wrapping it all in a platform engineering model. Short case studies throughout show how global companies already apply these ideas.

By the end, you will know how to build a support model that meets budget, resiliency, and customer experience goals - without adding manual toil.

Set Clear Outcomes and Technical SLAs Up Front

Even the best automation fails if nobody agrees on what “good” looks like. Sharpening your technical SLA (a service-level agreement that measures uptime, latency, and recovery time) forces alignment between business owners, finance, and operations.

- Document availability to two decimal places, e.g., 99.95% (about 21.6 minutes of allowed downtime per 30-day month) rather than shorthand such as “three and a half nines.”
- Define latency, the time a request waits for its response, in milliseconds at a stated percentile such as p95, so the target exposes true user experience.
- Map every SLA to a cost range so Finance sees the trade-offs between resiliency and spend.
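To make these targets concrete, the Python sketch below turns an availability percentage into a monthly downtime budget, checks measured latency against a p95 target, and tests spend against the agreed cost band. The `TechnicalSLA` record, its field names, and the nearest-rank percentile method are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TechnicalSLA:
    """Hypothetical SLA record; field names are illustrative."""
    availability_pct: float                      # e.g. 99.95
    p95_latency_ms: float                        # e.g. 50.0
    monthly_cost_range_usd: tuple[float, float]  # band Finance has signed off on


def downtime_budget_minutes(availability_pct: float, days: int = 30) -> float:
    """Allowed downtime, in minutes, over a period of `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - availability_pct / 100)


def p95(samples_ms: list[float]) -> float:
    """Nearest-rank 95th percentile of latency samples."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) using integer math
    return ordered[rank - 1]


def meets_latency_target(sla: TechnicalSLA, samples_ms: list[float]) -> bool:
    """True when the measured p95 is at or below the SLA target."""
    return p95(samples_ms) <= sla.p95_latency_ms


def within_cost_range(sla: TechnicalSLA, monthly_cost_usd: float) -> bool:
    """True when actual monthly spend falls inside the agreed cost band."""
    low, high = sla.monthly_cost_range_usd
    return low <= monthly_cost_usd <= high
```

For example, `downtime_budget_minutes(99.95)` comes to roughly 21.6 minutes, a number on-call engineers and Finance can both plan around.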

Self-service is another easy win that many teams skip: 60% of customer service agents still fail to promote self-service options, leaving hidden savings on the table. A written SLA that mandates self-service as the first line of defense closes this gap.

If you’re seeking structured guidance on SLA requirements and practical frameworks, explore How Managed IT Services Empower Business Growth.

With these definitions written down, everyone knows exactly what “good” means, which is why the next step, automating the easy work, will stick.

How Precise SLAs Reduced Support Calls by 18%

A European fintech rewrote its SLA from “near real-time” to “50 ms p95 latency.” The precise target justified an upgrade to global load balancing, which immediately cut support calls by 18% as customers saw consistently faster payments.

Automate Tier 1 and Tier 2 With Agentic AI and Service Desk Automation

Now that success is measurable, remove the human bottleneck from the simplest tickets. Agentic AI is a large-language-model-based assistant that can reason through multi-step workflows instead of spitting out canned answers. Pair it with service desk automation so routine problems fix themselves.

- Classify incoming incidents by using a natural-language model that tags urgency and component.
- Train a second model to suggest code snippets, Terraform modules, or runbooks.
- When confidence is high, let the AI execute safe actions: restart a pod, reassign a network route, or roll back a deployment.
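A minimal sketch of the confidence gate described in these steps, assuming a hypothetical allowlist of safe actions and a threshold of 0.9. The `classify` function is a keyword stand-in for the natural-language model; a real deployment would call a trained classifier or LLM at that point.

```python
from dataclasses import dataclass

# Hypothetical allowlist: only these actions may run without a human.
SAFE_ACTIONS = {"restart_pod", "reroute_traffic", "rollback_deployment"}
CONFIDENCE_THRESHOLD = 0.9  # assumed value; tune against your own ticket history


@dataclass
class Triage:
    component: str
    urgency: str
    suggested_action: str
    confidence: float


def classify(ticket_text: str) -> Triage:
    """Keyword stand-in for the natural-language classifier."""
    text = ticket_text.lower()
    if "crashloop" in text or "pod" in text:
        return Triage("kubernetes", "high", "restart_pod", 0.95)
    if "after deploy" in text or "new release" in text:
        return Triage("deployment", "high", "rollback_deployment", 0.92)
    if "timeout" in text:
        return Triage("network", "medium", "reroute_traffic", 0.70)
    return Triage("unknown", "low", "none", 0.0)


def route(ticket_text: str) -> str:
    """Return 'auto:<action>' when it is safe to act, otherwise 'human'."""
    t = classify(ticket_text)
    if t.suggested_action in SAFE_ACTIONS and t.confidence >= CONFIDENCE_THRESHOLD:
        return f"auto:{t.suggested_action}"
    return "human"
```

Keeping the allowlist separate from the model means a confident but wrong suggestion still cannot trigger an action outside the approved set.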

67% of IT operations teams report using AI or automation in some form, yet only 19% actually optimize cloud workloads with it. Elevating agentic AI from chatbot to action bot closes this gap.

At this stage, your service desk can resolve the simplest 40–60% of tickets without human involvement, clearing the runway for deeper automation.

For more on deploying automation and AI to accelerate IT support and operations, see Cloud Support: How Managed DevOps Keeps Your Business Online 24/7.

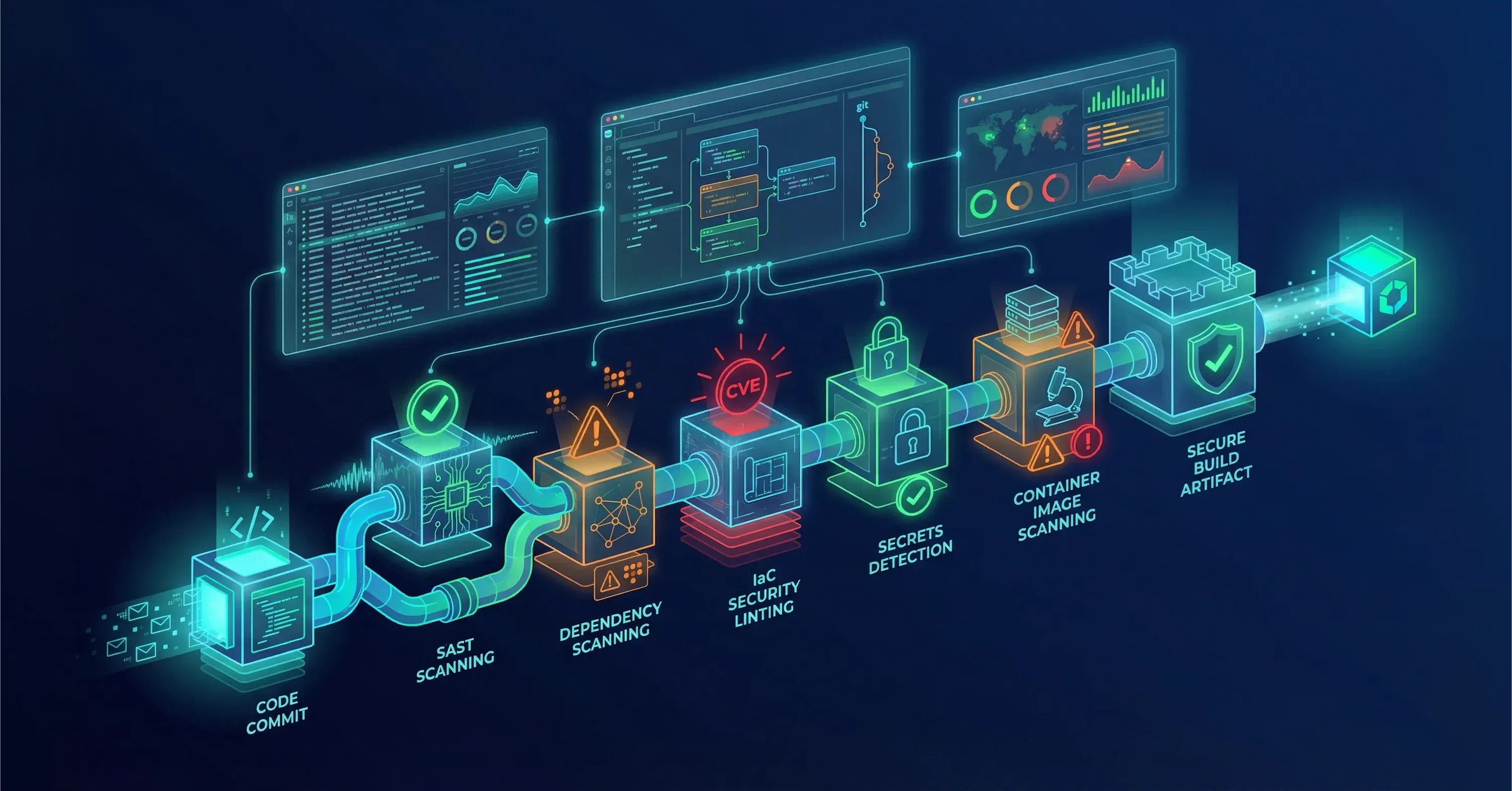

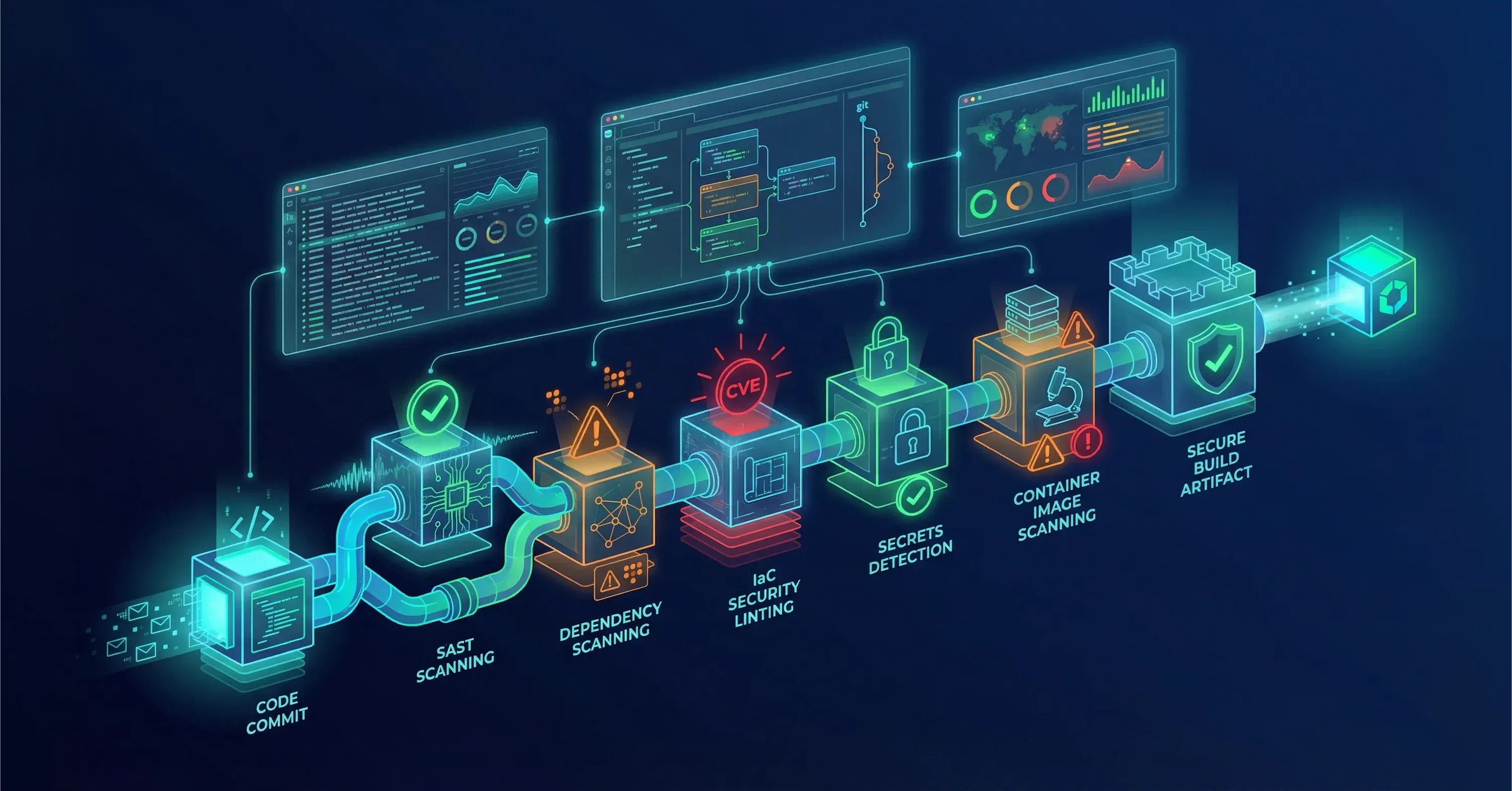

Encode Remediation as “Support as Code”

Manual runbooks decay. Support as Code turns every stable fix into a version-controlled script or workflow.

- After an incident is closed, require the engineer to push the final command sequence to Git.
- Use Infrastructure as Code tools such as Pulumi or Terraform to embed the logic that checks prerequisites, rolls back on error, and documents the outcome.
- Sync these scripts with the AI agents so they always pull the latest remediation playbook.
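The check-apply-verify-rollback pattern from these steps can be sketched in plain Python. In practice `apply` might wrap a Terraform or Pulumi run; here `RemediationStep`, `run_step`, and every callable are hypothetical placeholders so the control flow stays visible.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class RemediationStep:
    """One version-controlled fix. All names here are illustrative."""
    name: str
    precondition: Callable[[], bool]  # checks prerequisites before acting
    apply: Callable[[], None]         # the fix itself
    verify: Callable[[], bool]        # confirms the fix worked
    rollback: Callable[[], None]      # undoes the fix on failure


def run_step(step: RemediationStep, audit_log: list[dict]) -> bool:
    """Run one step and append an auditable record of what happened."""
    record = {"step": step.name, "started": time.time()}
    if not step.precondition():
        record["outcome"] = "skipped: precondition failed"
        audit_log.append(record)
        return False
    try:
        step.apply()
        if not step.verify():
            raise RuntimeError("verification failed")
        record["outcome"] = "applied"
        ok = True
    except Exception as exc:
        step.rollback()  # restore the previous state before reporting
        record["outcome"] = f"rolled back: {exc}"
        ok = False
    audit_log.append(record)
    return ok
```

Because every run lands in `audit_log`, the same structure that executes the fix also produces the trail auditors need.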

Because every fix is both executable and reviewable, on-call engineers trust the system, and auditors gain a clear trail.

For a detailed analysis of automation, environment consistency, and DevOps-driven remediation, see From Code to Customer: Accelerating Innovation with Cloud DevOps.

How Support as Code Kept Black Friday Queries Under 150 ms

A SaaS analytics vendor stored 300 common fixes as Terraform modules. During Black Friday, an IaC script auto-expanded the database cluster in seconds, keeping query time below the 150 ms SLA without paging anyone.

Embed FinOps Automation to Control Spend

Support is not only about uptime. Cloud costs can balloon if remediation routines scale indiscriminately. FinOps automation lets Finance and Engineering trust each other’s numbers.

- Pull cost data from the cloud provider billing API as close to real time as it allows; billing data often lags by several hours.
- Train an optimization model to spot idle resources and recommend rightsizing or shutdown.
- Trigger policy-as-code rules to enforce tag compliance, savings plans, or schedule changes.
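A rule-based sketch of the idle-resource and tag checks, assuming cost and CPU figures have already been pulled from the billing and monitoring APIs. The thresholds, the `REQUIRED_TAGS` policy, and the field names are assumptions, and the sketch uses fixed rules where the text describes a trained optimization model.

```python
from dataclasses import dataclass

REQUIRED_TAGS = {"owner", "cost-center", "environment"}  # assumed tagging policy
IDLE_CPU_PCT = 5.0  # assumed: daily average CPU below this counts as idle
IDLE_DAYS = 7       # assumed: idle on every one of the last 7 days


@dataclass
class Resource:
    resource_id: str
    daily_cost_usd: float
    avg_cpu_pct_by_day: list[float]  # one value per day, most recent last
    tags: dict[str, str]


def is_idle(r: Resource) -> bool:
    recent = r.avg_cpu_pct_by_day[-IDLE_DAYS:]
    return len(recent) >= IDLE_DAYS and max(recent) < IDLE_CPU_PCT


def missing_tags(r: Resource) -> set[str]:
    return REQUIRED_TAGS - set(r.tags)


def evaluate(resources: list[Resource]) -> dict:
    """Findings a policy-as-code step could act on (shutdown, tag enforcement)."""
    idle = [r for r in resources if is_idle(r)]
    return {
        "idle": [r.resource_id for r in idle],
        "monthly_savings_usd": round(sum(r.daily_cost_usd for r in idle) * 30, 2),
        "untagged": {r.resource_id: sorted(missing_tags(r))
                     for r in resources if missing_tags(r)},
    }
```

Returning findings rather than acting directly keeps the decision in a separate, reviewable policy step.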

As cloud contracts evolve, remember that 35% of top-performing companies plan to renegotiate cloud provider pricing, contracts, and SLAs. Automation makes renegotiation less urgent because waste surfaces as soon as it appears in billing data.

If you want an end-to-end playbook for managing migration costs and instituting FinOps, read The Cloud Cost Paradox: Why Migration Spikes Your Budget – And How a FinOps Solutions System Fixes It.

Shift Toward AIOps and Self-Healing Architectures

With Tier 1 and Tier 2 automated and costs tamed, move to prediction and prevention. AIOps combines machine learning with observability data to spot anomalies, sometimes hours before they trigger an incident.

- Aggregate traces, metrics, and logs in a central data lake.
- Apply unsupervised learning to detect novel patterns.
- Route pre-emptive fixes through Support as Code workflows.
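Unsupervised models vary widely, so the sketch below swaps in a simpler technique: a rolling z-score detector that flags a metric sample when it sits more than three standard deviations from its recent baseline. The window size and threshold are assumed defaults, not recommendations.

```python
import statistics
from collections import deque


class RollingZScoreDetector:
    """Flags samples that deviate strongly from a rolling baseline.

    A simple statistical stand-in for the unsupervised models in the text.
    """

    def __init__(self, window: int = 30, threshold: float = 3.0):
        self.history = deque(maxlen=window)  # oldest samples drop off automatically
        self.threshold = threshold

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the samples seen so far."""
        anomalous = False
        if len(self.history) >= 2:
            mean = statistics.fmean(self.history)
            stdev = statistics.stdev(self.history)
            if stdev == 0:
                anomalous = value != mean  # flat baseline: any change stands out
            else:
                anomalous = abs(value - mean) / stdev > self.threshold
        # For simplicity every sample joins the baseline, including anomalies.
        self.history.append(value)
        return anomalous
```

Each flagged sample would then be handed to a Support as Code workflow instead of paging a person.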

Self-healing means the platform not only spots a rising memory leak but also restarts the container, patches the code from a known repository, and validates health probes - without a 3 a.m. phone call.
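The self-healing loop in that paragraph can be sketched as detect, restart, validate, escalate. The leak heuristic (memory rising on every recent sample and above 80% of the limit) is an assumption, `restart` and `health_probe` are hypothetical hooks into your orchestrator, and the code-patching step is left out.

```python
from typing import Callable


def leak_suspected(memory_mb: list[float], limit_mb: float,
                   min_samples: int = 5, high_water: float = 0.8) -> bool:
    """Heuristic: memory rose on every recent sample and is near the limit."""
    recent = memory_mb[-min_samples:]
    if len(recent) < min_samples:
        return False
    rising = all(b > a for a, b in zip(recent, recent[1:]))
    return rising and recent[-1] >= high_water * limit_mb


def self_heal(memory_mb: list[float], limit_mb: float,
              restart: Callable[[], None],
              health_probe: Callable[[], bool],
              max_attempts: int = 2) -> str:
    """Restart and confirm health; hand off to a human if it stays unhealthy."""
    if not leak_suspected(memory_mb, limit_mb):
        return "no action"
    for _ in range(max_attempts):
        restart()
        if health_probe():
            return "healed"
    return "escalated"
```

Capping attempts keeps a bad fix from looping forever; after `max_attempts`, the incident goes to on-call with context attached.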

Dive deeper into predictive operations and intelligent monitoring with Be Cloud: The Next-Gen Platform for Scalable Business.

Near-Zero Customer Impact Through Predictive Operations

During a streaming service launch, an AIOps system detected a deadlock pattern when just 1% of users had experienced buffering. The platform auto-deployed a sidecar patch and stopped the incident from escalating, so the vast majority of viewers never noticed.

Measure Success With the Engineer-to-Instance Ratio

By 2026, many CFOs are likely to focus on a single chart: the number of cloud instances divided by the full-time engineers who manage them. For mature SaaS providers, the goal is at least 2,000 instances per engineer, an Engineer-to-Instance ratio of 1:2,000 or better.

To track and improve the metric:

- Count every Kubernetes pod, VM, database node, and managed service instance.
- Exclude developers from the denominator: only operations staff are relevant.
- Track the ratio monthly and set quarterly improvement targets.
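Counting the ratio the same way every month matters more than the exact tooling. A minimal sketch, assuming a hypothetical `MonthlyInventory` record filled from your inventory and HR systems:

```python
from dataclasses import dataclass


@dataclass
class MonthlyInventory:
    """Hypothetical monthly snapshot; field names are illustrative."""
    month: str                      # e.g. "2025-01"
    k8s_pods: int
    vms: int
    db_nodes: int
    managed_service_instances: int
    ops_engineers_fte: float        # operations staff only; developers excluded

    @property
    def instances(self) -> int:
        return (self.k8s_pods + self.vms + self.db_nodes
                + self.managed_service_instances)

    @property
    def instances_per_engineer(self) -> float:
        if self.ops_engineers_fte <= 0:
            raise ValueError("operations headcount must be positive")
        return self.instances / self.ops_engineers_fte


def ratio_label(inv: MonthlyInventory) -> str:
    """Format as Engineer-to-Instance, e.g. '1:2,200'."""
    return f"1:{round(inv.instances_per_engineer):,}"


def meets_goal(inv: MonthlyInventory, goal: int = 2000) -> bool:
    """True when each engineer manages at least `goal` instances."""
    return inv.instances_per_engineer >= goal
```

Storing one snapshot per month makes the quarterly targets a simple comparison between records.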

With 24% more IT professionals planning automation investments in 2024 than in 2023, boards are likely to expect this number to rise quickly, and companies that fail to automate risk losing competitive margin.

To see how organizations maximize talent and automation in production, check out The Managed DevOps Cheat Sheet: how to cut App Development Time and Costs by 80%.

Scaling Cloud Operations While Freeing Budget for Cybersecurity

A leading provider of managed IT services raised its Engineer-to-Instance ratio from 1:400 to 1:2,700 by combining agentic AI, Support as Code, and a strict FinOps policy. The savings funded a cybersecurity upgrade without increasing total spend.

Wrap Everything in a Platform Engineering Team

Platform engineering turns the above capabilities into a product consumed by internal developers. The team owns the paved road - standard APIs, golden paths, and documentation - so application teams never reinvent support mechanics.

- Offer self-service templates for microservices, data pipelines, and event streams.
- Provide “support hooks” that auto-register new workloads with monitoring, FinOps tags, and SLA dashboards.
- Hold weekly office hours to gather feedback and refine self-service features.
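A support hook can be as simple as one registration call that every golden-path template makes. The sketch below uses hypothetical in-memory stand-ins for the monitoring, FinOps tagging, and SLA dashboard systems; a real platform would call their respective APIs instead.

```python
from dataclasses import dataclass, field


@dataclass
class Workload:
    name: str
    team: str
    cost_center: str
    slo_p95_ms: float


@dataclass
class PlatformRegistry:
    """Hypothetical stand-ins for monitoring, FinOps, and SLA dashboard systems."""
    monitoring_targets: list[str] = field(default_factory=list)
    cost_tags: dict[str, dict[str, str]] = field(default_factory=dict)
    sla_dashboards: dict[str, float] = field(default_factory=dict)

    def register(self, w: Workload) -> None:
        """One call wires a new workload into every support system.

        Safe to call repeatedly: re-registering does not duplicate entries.
        """
        if w.name not in self.monitoring_targets:
            self.monitoring_targets.append(w.name)
        self.cost_tags[w.name] = {"owner": w.team, "cost-center": w.cost_center}
        self.sla_dashboards[w.name] = w.slo_p95_ms
```

Making the hook idempotent lets templates call it on every deploy without extra bookkeeping.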

84% of cloud buyers now bundle customer service and support into their contracts. Platform engineering lets your company offer internal teams the same seamless experience that vendors provide externally.

What Is a Scalable Cloud Services Support Model?

A scalable cloud services support model is a highly automated framework in which technical SLAs are codified, Tier 1–2 issues are resolved by agentic AI, incident fixes are stored as version-controlled scripts (Support as Code), cost controls run through hands-off FinOps automation, and AIOps predicts failures so a small platform engineering team can manage thousands of instances.

Conclusion

Building a cloud services support model that scales is no longer about throwing people at tickets. By codifying SLAs, deploying agentic AI, converting fixes into reusable code, automating cost controls, leaning on AIOps, tracking the Engineer-to-Instance ratio, and productizing everything through platform engineering, you create a cloud that largely runs itself - freeing your experts to focus on the next wave of innovation.