What You Will Learn

This guide moves from market realities to hands-on tactics.

You will see:

-

How rising cloud use changes risk management and compliance in cloud environments

-

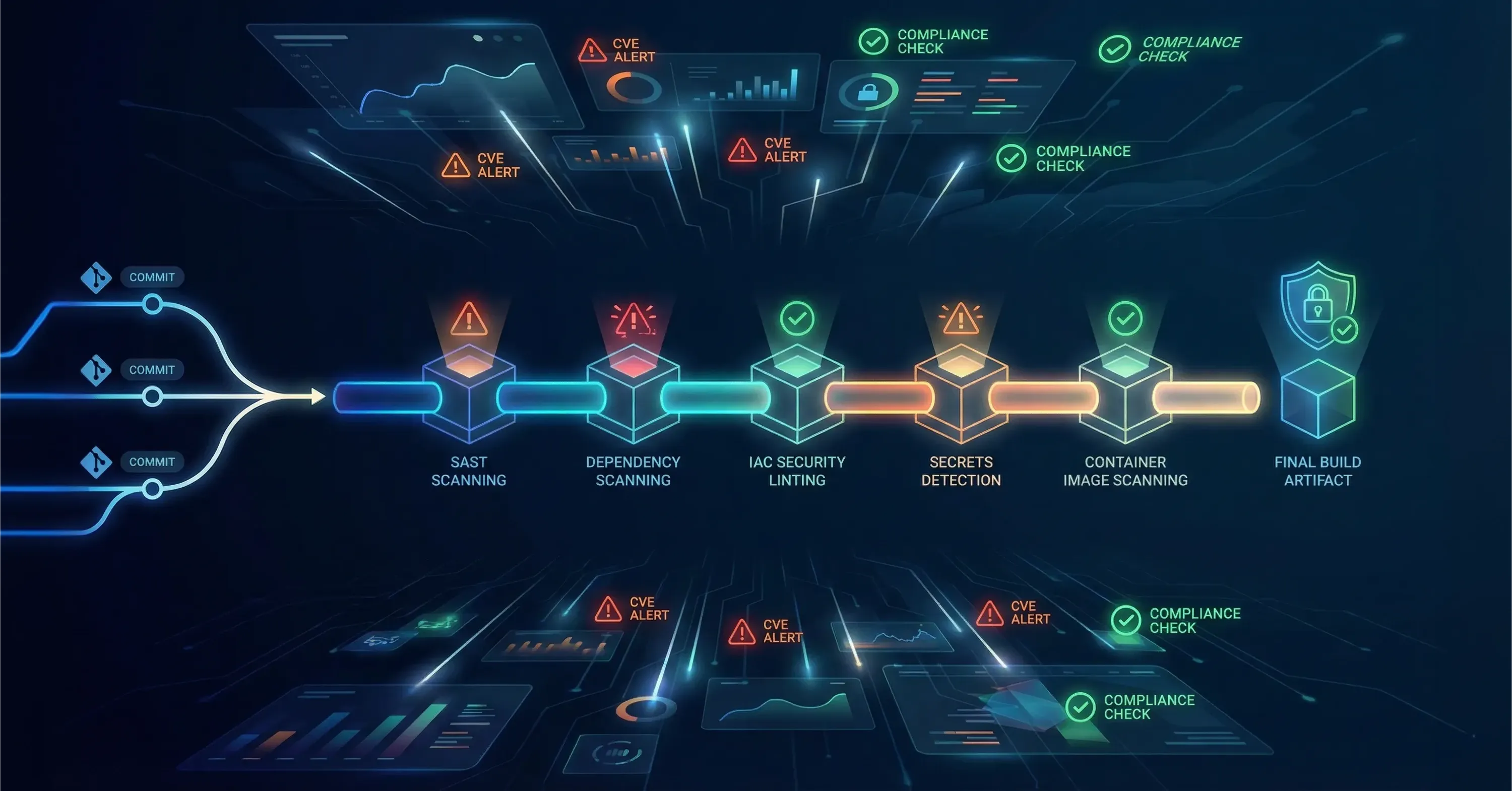

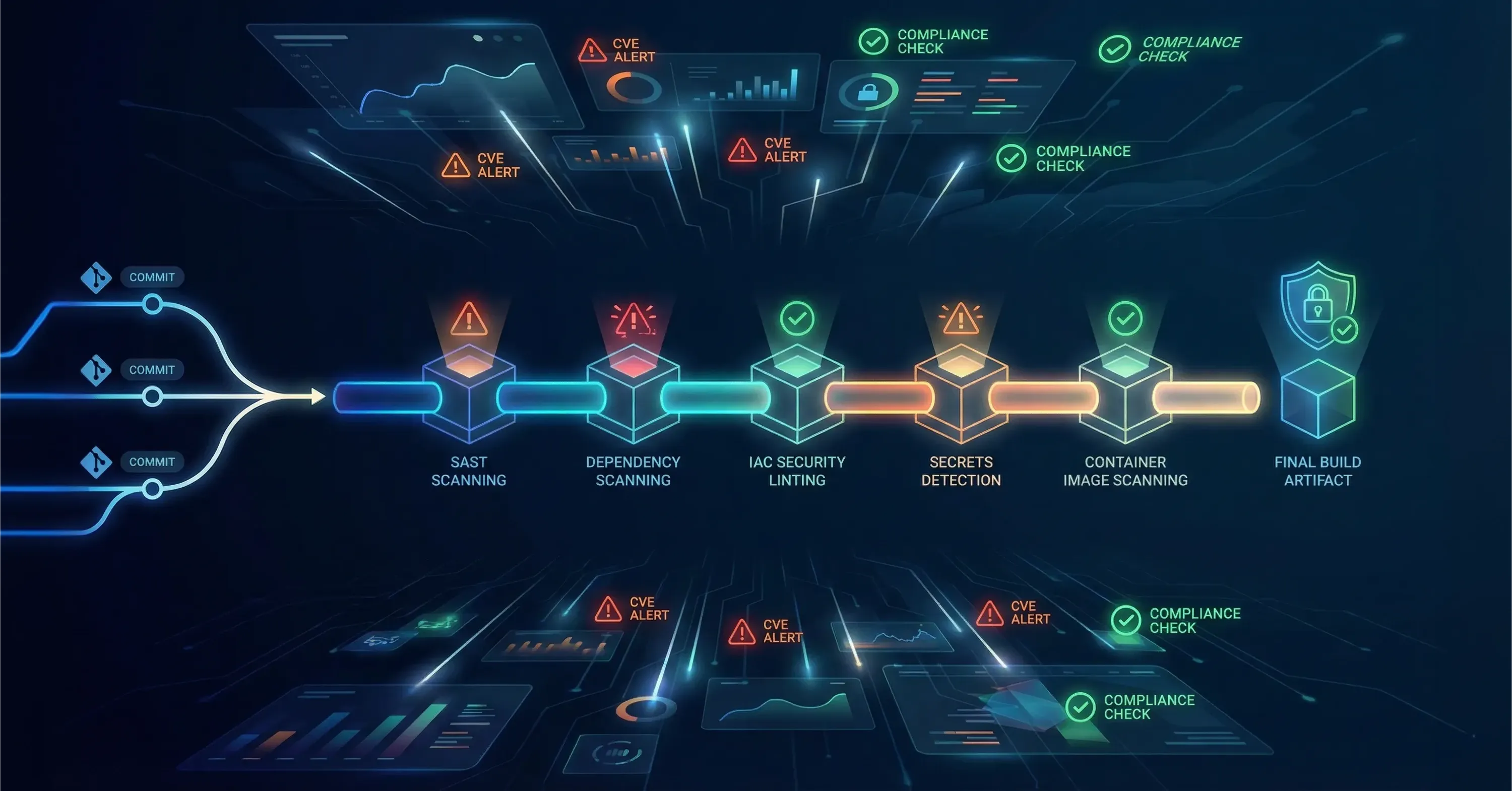

Why DevSecOps shifts security checks into the coding and build stages

-

Where automated guardrails live inside a continuous integration/continuous delivery (CI/CD) pipeline

-

How to balance performance with security controls, understand the shared responsibility model, and choose encryption methods

-

Tips for hybrid and multi-cloud setups, packed with real cases and numbers

Let us start with the bigger picture.

The Cloud Growth Boom Meets a Growing Attack Surface

Enterprise reliance on cloud is now the norm. The global market reached USD 676.29 billion in 2024 and is forecast to top USD 781.27 billion next year. That momentum invites both innovation and risk.

At the same time, security pain points multiply.

Risk is no longer an afterthought. It must live inside the delivery pipeline.

Good policies and clear responsibilities reduce exposure. Yet manual review cannot keep up with thousands of builds a month, which brings us to DevSecOps.

Real-World Example

A fintech scale-up expanded from 20 to 200 microservices in one year. Release cycles shrank to hours, but vulnerability scans were still executed once a quarter. A single misconfigured S3 bucket exposed staging data. The team later moved to real-time scanning inside Jenkins, preventing bucket exposure from recurring.

That quick story shows why shifting security left matters. Next, we examine how that looks.

DevSecOps: Moving Security Checks to Code and Build

Developers push code daily. Adding security only after deployment causes delays and missed issues. DevSecOps embeds checks where code is written and compiled, shrinking feedback loops.

Key mechanics:

- Static application security testing (SAST) runs on every pull request
- Dependency checks flag outdated libraries with known CVEs (Common Vulnerabilities and Exposures)
- Infrastructure as code (IaC) templates are linted for risky defaults
- Secrets detection prevents keys in Git commits

By integrating these tasks into the same pipeline that compiles and tests code, teams treat security bugs like functional bugs: fix them before merge.
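To make the secrets detection step concrete, here is a minimal sketch in Python of a check that a pipeline job might run against changed files. It assumes only two illustrative patterns (the well-known `AKIA` prefix of AWS access key IDs and a PEM private key header). The names `SECRET_PATTERNS` and `find_secrets` are hypothetical, and a production scanner would ship a far larger rule set.

```python
import re

# Illustrative patterns only (an assumption for this sketch);
# real scanners maintain far larger rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
}


def find_secrets(text):
    """Return (line_number, rule_name) pairs for every suspicious line."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, rule_name))
    return findings


# Example input using the placeholder key from AWS documentation.
sample_change = 'region = "us-east-1"\naws_key = "AKIAIOSFODNN7EXAMPLE"\n'
for line_number, rule_name in find_secrets(sample_change):
    print(f"line {line_number}: possible {rule_name}")
```

A job like this would fail the build whenever `find_secrets` returns a non-empty list, which treats a leaked key like any other failing test.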

Transitioning from isolated security to DevSecOps needs cultural change. Developers own security findings, while security engineers build reusable policies.

This shift reduces rework and builds confidence that shipped artifacts are hardened.

For a modern perspective on embedding DevSecOps and automating compliance at scale, explore Tech-Driven DevOps: How Automation is Changing Deployment.

Automation Guardrails in the CI/CD Pipeline

Automation is the only way to keep pace with cloud speed. Guardrails are rules that block unsafe actions while staying invisible when everything is correct.

Common automated guardrails:

- Enforce branch protection so only signed commits enter main
- Gate merges on passing security scan scores
- Use policy-as-code engines such as Open Policy Agent to block risky IaC changes
- Auto-tag resources that lack encryption at rest and raise alerts
- Schedule nightly drift detection jobs to compare live cloud state against IaC

Automation succeeds when developers hardly notice it. If a build breaks, the feedback is clear and actionable.
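As a rough illustration of a merge gate with clear, actionable feedback, the sketch below filters scanner findings by severity and formats a message for each blocking item. The finding fields (`id`, `severity`, `location`, `fix`), the sample values, and the default threshold of `high` are all assumptions for this example, not the output format of any particular scanner.

```python
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def evaluate_gate(findings, block_at="high"):
    """Return (passed, messages) for a list of scanner findings.

    Each finding is a dict with "id", "severity", "location", and "fix"
    keys (a hypothetical shape chosen for this sketch).
    """
    threshold = SEVERITY_RANK[block_at]
    blocking = [f for f in findings if SEVERITY_RANK[f["severity"]] >= threshold]
    messages = [
        f"[{f['severity'].upper()}] {f['id']} at {f['location']}: {f['fix']}"
        for f in blocking
    ]
    return (not blocking, messages)


# Hypothetical findings, for illustration only.
sample_findings = [
    {"id": "S3-PUBLIC-READ", "severity": "critical",
     "location": "storage.tf:12", "fix": "set the bucket ACL to private"},
    {"id": "OLD-LIB", "severity": "low",
     "location": "requirements.txt:4", "fix": "upgrade to a patched release"},
]
passed, messages = evaluate_gate(sample_findings)
print("gate passed" if passed else "gate blocked")
for message in messages:
    print(message)
```

Low-severity findings stay out of the way here, which is one way to keep the guardrail invisible until something serious appears.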

A small upfront investment in writing policies pays dividends through reduced incidents and audit readiness.
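The nightly drift detection job listed among the guardrails above can be approximated in a few lines. This sketch assumes both the declared IaC state and the live cloud state have already been exported as dictionaries keyed by resource ID. The function name `detect_drift` and the sample resources are hypothetical.

```python
def detect_drift(declared, live):
    """Compare declared IaC state with live state, both keyed by resource ID.

    Each value is a flat dict mapping attribute names to values.
    Returns a dict of resource ID -> human-readable drift description.
    """
    drift = {}
    for resource_id in sorted(declared.keys() | live.keys()):
        if resource_id not in live:
            drift[resource_id] = "missing from live environment"
        elif resource_id not in declared:
            drift[resource_id] = "unmanaged resource (not in IaC)"
        else:
            wanted, actual = declared[resource_id], live[resource_id]
            changed = sorted(
                key for key in wanted.keys() | actual.keys()
                if wanted.get(key) != actual.get(key)
            )
            if changed:
                drift[resource_id] = "attributes differ: " + ", ".join(changed)
    return drift


# Hypothetical state snapshots, for illustration only.
declared_state = {
    "bucket-a": {"encrypted": True, "public": False},
    "db-1": {"encrypted": True},
}
live_state = {
    "bucket-a": {"encrypted": True, "public": True},
    "vm-9": {"size": "small"},
}
for resource_id, description in detect_drift(declared_state, live_state).items():
    print(f"{resource_id}: {description}")
```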

For an in-depth, hands-on approach to building and securing automated pipelines, check out The Managed DevOps Cheat Sheet: how to cut App Development Time and Costs by 80%.

Teams can also use managed services from a leading provider of managed IT services that bundles infrastructure management with continuous security monitoring, saving staff hours.

Real-World Example

An e-commerce firm inserted Prisma Cloud scans into CircleCI. Builds increased by 12%, yet pipeline duration grew by only two minutes. Leadership called it a “guardrail, not a speed bump,” as releases continued on time with fewer late-stage surprises.

Shared Responsibility Model: Clarifying the Line

Every major cloud vendor states that while they secure the platform, customers secure what they build on that platform. Understanding where the line sits is vital.

- Provider responsibilities: physical data centers, core infrastructure, hypervisors, managed service maintenance
- Customer responsibilities: data classification, identity and access management, network rules, application code, customer-side encryption keys

Confusion leads to gaps. A study revealed that 29% of organizations had at least one workload that was publicly exposed, critically vulnerable, and highly privileged, a situation often caused by unclear ownership.

Mapping every control to a role minimizes overlap or blind spots.
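One lightweight way to do that mapping is to keep it as data and audit it automatically. The sketch below assumes a simple dictionary of controls to owners. The control names, team labels, and the `audit_ownership` helper are hypothetical examples, not a standard taxonomy.

```python
# Hypothetical controls and owners, for illustration only.
CONTROL_OWNERS = {
    "physical data center security": ["provider"],
    "hypervisor patching": ["provider"],
    "identity and access management": ["customer:platform-team"],
    "network rules": ["customer:platform-team", "customer:app-team"],
    "data classification": [],
}


def audit_ownership(control_owners):
    """Split controls into blind spots (no owner) and overlaps (several owners)."""
    blind_spots = sorted(c for c, owners in control_owners.items() if not owners)
    overlaps = sorted(c for c, owners in control_owners.items() if len(owners) > 1)
    return blind_spots, overlaps


blind_spots, overlaps = audit_ownership(CONTROL_OWNERS)
print("No owner:", blind_spots)
print("Multiple owners:", overlaps)
```

Running a check like this in the pipeline surfaces unowned controls before an audit or an incident does.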

For a broader security strategy that seamlessly incorporates the shared responsibility model, see Cloud Managed Security: Unified Security Strategy for Cloud and Hybrid Environments.

Encryption Strategies: Data in Motion and at Rest

Encryption neutralizes many threats even if attackers enter the perimeter.

Necessary layers:

- Transport Layer Security (TLS) for all traffic between services
- Server-side encryption for object storage; use KMS- or HSM-backed keys
- Database encryption at rest with customer-managed keys when cloud compliance requirements demand key ownership
- Client-side encryption for highly sensitive workloads
- Key rotation and lifecycle policies baked into the pipeline

Performance overhead is usually minimal on modern CPUs that provide hardware acceleration, yet benchmarking in staging ensures latency targets hold. Trade-offs may appear when encrypting massive analytics clusters. Teams often cache decrypted datasets in secure enclaves to keep query speed high.
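The key rotation policy mentioned in the list above can also be enforced as a pipeline check. This is a minimal sketch that assumes the last rotation date of each key is already available, for example from an inventory export. The 90-day limit, the key names, and the `keys_due_for_rotation` helper are assumptions for illustration, not a recommendation from any specific standard.

```python
from datetime import date, timedelta


def keys_due_for_rotation(keys, today, max_age_days=90):
    """Return sorted IDs of keys last rotated more than max_age_days ago.

    `keys` maps a key ID to the date of its last rotation.
    """
    cutoff = today - timedelta(days=max_age_days)
    return sorted(key_id for key_id, rotated_on in keys.items() if rotated_on < cutoff)


# Hypothetical key inventory, for illustration only.
key_inventory = {
    "orders-db": date(2024, 6, 1),
    "logs-bucket": date(2024, 12, 1),
}
print(keys_due_for_rotation(key_inventory, today=date(2025, 1, 1)))
```

Passing `today` explicitly keeps the function deterministic, which makes the policy easy to test alongside the rest of the pipeline code.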

Encryption is one part of a holistic cloud security approach that also covers sovereignty and compliance.

Risk Management and Compliance in Hybrid and Multi-Cloud

Hybrid and multi-cloud architectures are now mainstream. 84% of leaders intentionally use multiple clouds for flexibility, yet that multiplies risk.

Challenges:

Mitigation actions:

- Central inventory of assets across providers
- Unified identity using SSO and federated roles
- Cross-cloud policy engines that evaluate compliance once, push everywhere
- Encrypt inter-cloud links with VPN or dedicated connections
- Continuous cost monitoring tools aligned with FinOps

Hybrid designs also help when leaders fear geopolitical issues; 75% have concerns about storing data globally. Data residency controls, such as region-locked buckets, reduce that worry.
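A central inventory makes data residency rules straightforward to check. The sketch below assumes a cross-provider inventory has been flattened into a list of records. The record fields, the bucket names, and the allowed-region set are hypothetical. The region identifiers follow the naming used by AWS, Azure, and Google Cloud.

```python
def residency_violations(inventory, allowed_regions):
    """Return sorted "provider/name" labels for assets outside allowed regions.

    Each inventory record is a dict with "provider", "name", and "region"
    keys (a hypothetical shape chosen for this sketch).
    """
    return sorted(
        f"{asset['provider']}/{asset['name']}"
        for asset in inventory
        if asset["region"] not in allowed_regions
    )


# Hypothetical inventory and policy, for illustration only.
asset_inventory = [
    {"provider": "aws", "name": "customer-exports", "region": "eu-west-1"},
    {"provider": "azure", "name": "invoice-archive", "region": "westeurope"},
    {"provider": "gcp", "name": "analytics-raw", "region": "us-central1"},
]
eu_only = {"eu-west-1", "eu-central-1", "westeurope", "europe-west1"}
print(residency_violations(asset_inventory, eu_only))
```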

For step-by-step guidance on orchestrating hybrid and multi-cloud operations, see Cloud Services and DevOps.

Quick Reference: Balancing Cloud Computing and Cloud Security

Balancing cloud computing and cloud security means embedding automated controls into every phase of the software lifecycle. Security checks shift left into coding and build stages via DevSecOps, automated guardrails enforce policies without slowing releases, clear shared responsibility maps prevent gaps, robust encryption protects data in transit and at rest, and unified governance tools manage risk across hybrid or multi-cloud estates.

Conclusion

Cloud growth will not slow. The real question is whether risk grows with it. By shifting security left, automating guardrails, clarifying responsibilities, encrypting by default, and unifying governance across hybrid and multi-cloud stacks, DevOps leaders can ship faster while sleeping better.

A balanced approach transforms security from a blocker into a quiet partner that keeps innovation on track.